Development Tools for ADAS Design

Automotive Technology Solutions

Deep Learning has given image processing a real leap in both quality and robustness, so object recognition can now be performed in real time. Our object detection and classification technology enables new use cases for driver assistance systems and autonomous driving.

There are two paths to developing ADAS use cases: the pre-trained EYYESNET, or a new development through our optimized CNN Development & Training.

EYYESNET

EYYESNET is a neural network for visual object recognition in images, covering the main traffic-related classes (person, bicycle/motorcycle, car, truck/bus, train/tram) for automotive and railroad solutions. The network architecture has been optimized for high precision and low power consumption, especially for use on embedded platforms.

EYYESNET can be used with traditional AI inference engines or with the powerful EYYES Layer Processing Unit. Over a 5-year training process, more than 5 PB of real image data was used, plus more than 10 PB of additional synthetic data covering a wide range of weather and environmental conditions. The network is continuously developed and trained for further applications, objects and environmental conditions, so EYYESNET users benefit from constant improvement of their applications and solutions.

Recognition of:

• Pedestrians (adults, children, …)

• Cyclists

• Wheelchair users

• Cars

• Trucks

• Trains

• Traffic light signals

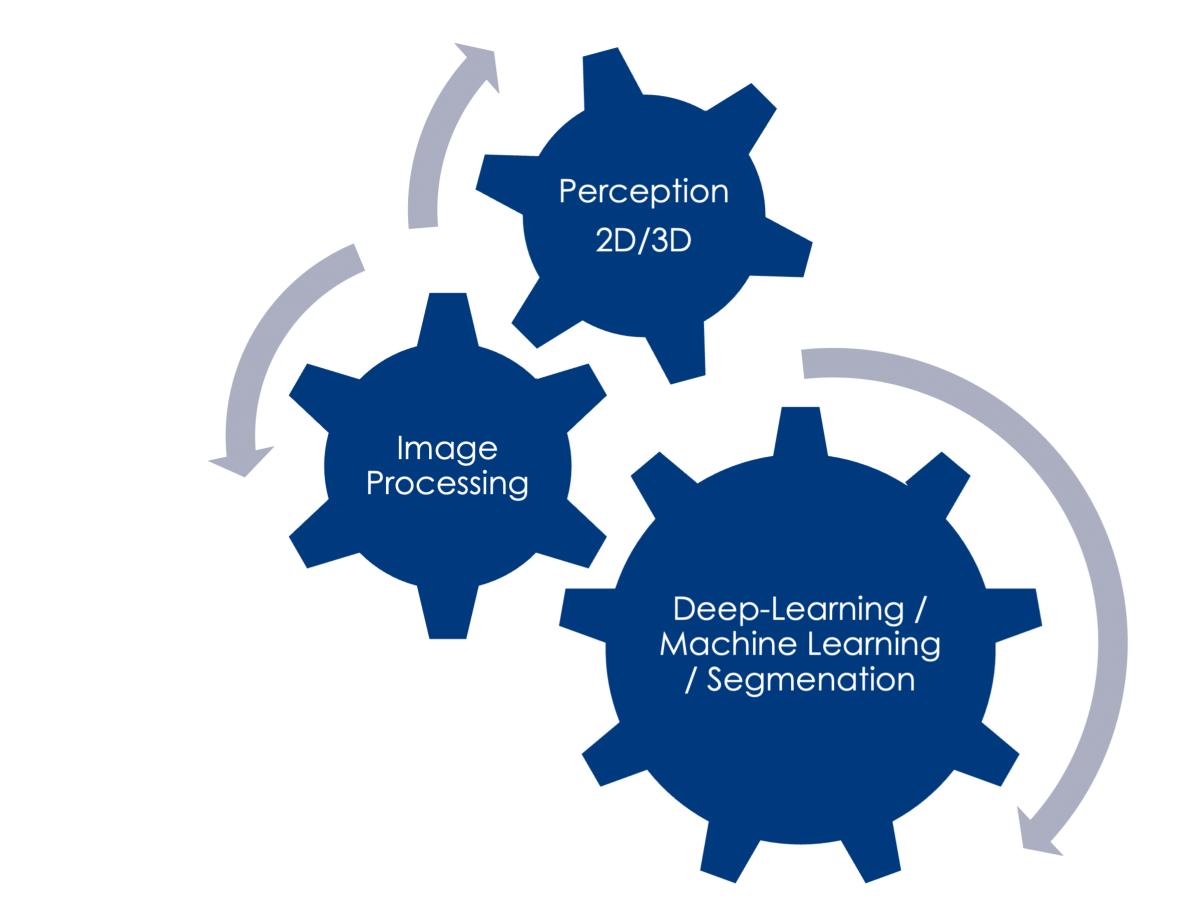

SIRIUS Software Framework

With the modular SIRIUS software framework from EYYES, prototypes for video processing systems can be implemented in a short time. It includes modules for Deep Learning, in which EYYESNET or other neural networks can be used. The modules can be linked and parameterized as desired, so the framework can cover a wide range of applications:

• Detection of the environment by means of 2D-3D estimation

• Image processing pipeline for resizing, rotation, enhancement, acquisition & morphology

• CNN calculation and segmentation
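
The idea of linking and parameterizing modules can be illustrated with a minimal sketch. This is not the SIRIUS API: the `Pipeline` class and the `resize_nearest` and `rotate90` functions are hypothetical names chosen for illustration, and images are represented as plain nested lists to keep the example self-contained.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Tuple

Image = List[List[int]]  # grayscale image as rows of pixel values


def resize_nearest(img: Image, width: int, height: int) -> Image:
    """Resize with nearest-neighbour sampling (hypothetical module)."""
    src_h, src_w = len(img), len(img[0])
    return [
        [img[y * src_h // height][x * src_w // width] for x in range(width)]
        for y in range(height)
    ]


def rotate90(img: Image) -> Image:
    """Rotate the image by 90 degrees clockwise (hypothetical module)."""
    return [list(row) for row in zip(*img[::-1])]


@dataclass
class Pipeline:
    """Chains processing modules; each stage keeps its own parameters."""
    stages: List[Tuple[Callable[..., Image], Dict]] = field(default_factory=list)

    def add(self, module: Callable[..., Image], **params) -> "Pipeline":
        self.stages.append((module, params))
        return self  # returning self allows stages to be chained

    def run(self, img: Image) -> Image:
        for module, params in self.stages:
            img = module(img, **params)
        return img


# Link two modules and parameterize the first one.
pipeline = Pipeline().add(resize_nearest, width=2, height=2).add(rotate90)

frame = [
    [1, 2, 3, 4],
    [5, 6, 7, 8],
    [9, 10, 11, 12],
    [13, 14, 15, 16],
]
result = pipeline.run(frame)
```

In a sketch like this, a Deep Learning stage would simply be another callable added to the chain, which is one way modules could be recombined for different applications.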

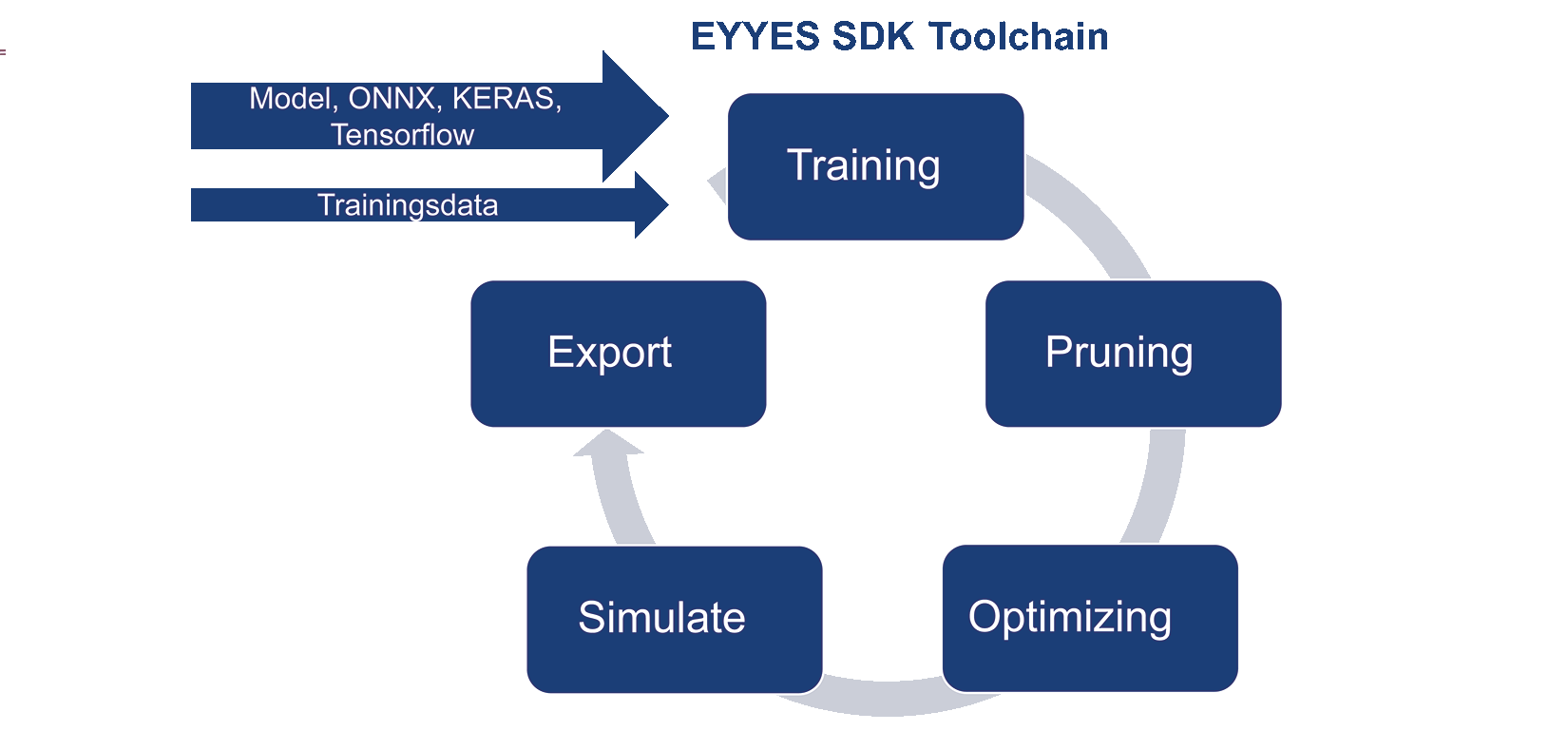

CNN optimization

With CNN optimization, an existing network can be reduced by a factor of up to 40 with almost no loss in the quality of the results. This service can be used on its own and is not tied to a particular network type. The almost lossless reduction of the network makes it possible to solve complex tasks in an embedded system.
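
To give a feel for what a reduction factor means on an embedded target, here is a small arithmetic sketch. The 200 MB starting size is a hypothetical figure chosen for illustration, not a measurement of any EYYES network, and the function name is an assumption.

```python
def reduced_size_mb(original_mb: float, factor: float) -> float:
    """Size after reducing a network by the given factor (illustrative only)."""
    if factor < 1:
        raise ValueError("reduction factor must be at least 1")
    return original_mb / factor


# Hypothetical 200 MB network reduced by the maximum stated factor of 40.
example_mb = reduced_size_mb(200.0, 40.0)
```

Under these assumed numbers the network would shrink to 5 MB, a size that fits far more easily into the memory of an embedded platform.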

Contact us!