RailEye 3D

Mobility of the future

Transport companies face continuously rising passenger numbers, and with them longer trains, higher service frequencies and tighter dispatch times. Modern approaches attempt to speed up boarding and alighting through comfort functions with appropriate passenger guidance. For the train of the future, it will be crucial to support the driver and the staff with “intelligent” functions, such as automatic monitoring of the entire exterior of the train, including all door areas.

The aim of this project is therefore to investigate and develop innovative 3D sensor systems that act as a driver assistance system. Ideally, a red/green display signals the status of the dispatch process and of the area between the train and the platform edge, accelerating dispatch while complying with the required safety standards and operational regulations. Safety is to be further improved by monitoring the areas between the carriages with additional sensors, so that passengers and staff in these areas are recognised automatically.

RailEye 3D: Passenger detection on the platform

In this research project, the consortium aims to couple innovative 3D sensor systems with modern visual analysis and machine learning methods and to evaluate them in a demonstrator system for safety-critical applications. Railway-specific standards and regulations must be taken into account, as must domain-specific functionalities. The following representative use cases will be considered:

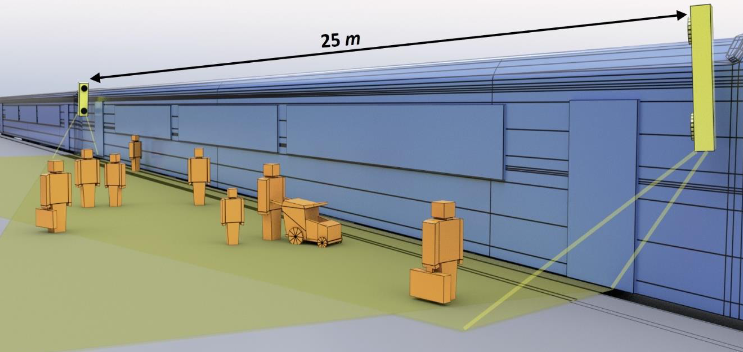

Monitoring of the entire exterior of the train: The system is to use novel methods and procedures (analysis of the 3D scene context in combination with deep-learning methods such as R-CNNs) as well as innovative sensor technology (AHDR sensor technology for optimal image quality) to detect selected situations, such as a person leaning against the train, a person standing in the gap between the train and the platform edge, a pram in the gap, a person in the door area, or an object in the danger zone. The system is to be installed on a test vehicle for demonstration purposes and tested in a field trial.

Analysis of the state of the train: On the one hand, this lets the driver dispatch the train segments semi-automatically; on the other hand, it automatically checks whether a person is still leaning on a door or against the vehicle, or is still in the danger area. This algorithm is to be investigated and analysed together with the stakeholders of the OEBB to determine which functionalities such a future system will require.

Situation image or perception display covering the entire length of the train, with a bird’s-eye view of the positions of persons and objects, to give the driver an optimal overview. This enables the driver to relate information from a video image to a physical position along the train, especially on long regional and long-distance trains, and to respond specifically to events.
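As an illustration of how a detection in a camera image could be placed in such a bird’s-eye view, the following minimal sketch maps image points (for example, the foot point of a detected person) onto a flat platform plane using a planar homography. This is not the project's implementation: the function name `project_to_ground`, the matrix `H_example` and all numeric values are hypothetical, and a real matrix would come from the 2D/3D calibration of each camera.

```python
import numpy as np

def project_to_ground(H, pixels):
    """Map N x 2 pixel coordinates to N x 2 ground-plane coordinates
    using a 3 x 3 homography H (image plane -> platform plane)."""
    pts = np.asarray(pixels, dtype=float)
    # Append a 1 to each point to obtain homogeneous coordinates.
    homog = np.hstack([pts, np.ones((len(pts), 1))])
    mapped = homog @ np.asarray(H, dtype=float).T
    # Divide by the last component to return to Cartesian coordinates.
    return mapped[:, :2] / mapped[:, 2:3]

# Hypothetical calibration: a pure scaling where 100 px correspond to 1 m.
H_example = np.array([[0.01, 0.0, 0.0],
                      [0.0, 0.01, 0.0],
                      [0.0, 0.0, 1.0]])

# Hypothetical foot points of two detected persons, in pixels.
foot_points_px = [(250.0, 120.0), (1000.0, 80.0)]
ground_m = project_to_ground(H_example, foot_points_px)
print(ground_m)  # positions in metres on the assumed platform plane
```

With overlapping camera views, each camera would have its own homography, and the projected points could then be merged into one map along the train.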

Smarte Schiene: RailEye3D (GERMAN)

Automatic self-dispatch with RailEye3D

Project goals

Automatic detection of dangerous situations to support the driver by combining depth image calculation and image-based scene recognition with deep learning

- All doors and areas must be checked before dispatching

- Robust procedure for turbulent platform situations

  - Crowds of people

  - Weather and environmental influences

Monitoring between carriages

- Detect people in danger areas

- Increase in passenger safety

- Reduction of waiting times

- Relief of the train crew

Camera-based approach

- Overlapping fields of view (FoV)

- Deep learning object detection

- 2D/3D calibration

Generation of abstract bird’s-eye views

- Abstract representation of platform activity

- Dynamic risk assessment

- Minimum false negative rates
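To illustrate how a risk assessment on the abstract platform map could feed a red/green display, here is a minimal sketch under stated assumptions. The `Detection` class, the `dispatch_signal` function, the danger-zone width and the score threshold are all hypothetical and not taken from the project. The threshold is deliberately low so that uncertain detections still trigger "red", which favours few false negatives at the cost of more false alarms.

```python
from dataclasses import dataclass

@dataclass
class Detection:
    x_m: float    # position along the train, in metres
    y_m: float    # lateral distance from the train side, in metres
    score: float  # detector confidence in [0, 1]

def dispatch_signal(detections, danger_width_m=0.5, min_score=0.2):
    """Return "red" if any sufficiently confident detection lies inside
    the assumed danger strip next to the train, otherwise "green"."""
    for d in detections:
        in_danger_zone = 0.0 <= d.y_m <= danger_width_m
        if in_danger_zone and d.score >= min_score:
            return "red"
    return "green"

# Hypothetical scene: one person far from the train, one close to a door.
scene = [Detection(x_m=12.0, y_m=1.8, score=0.95),
         Detection(x_m=40.5, y_m=0.3, score=0.35)]
print(dispatch_signal(scene))
```

A fixed strip width is the simplest possible choice; a dynamic assessment could instead adapt the zone or the threshold to the door state or to crowd density.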

Project partner